Gemini in Android Studio is now multimodal, so developers can use it to turn visuals into app code.

Hey Android devs! If you've ever wished your development tools could just "see" what you're trying to build, today's your lucky day. Google has just announced a massive upgrade to Gemini in Android Studio that brings multimodal capabilities to developers' favorite AI assistant. In plain English? Gemini can now look at images you share and understand them, opening up a world of exciting possibilities for Android developers.

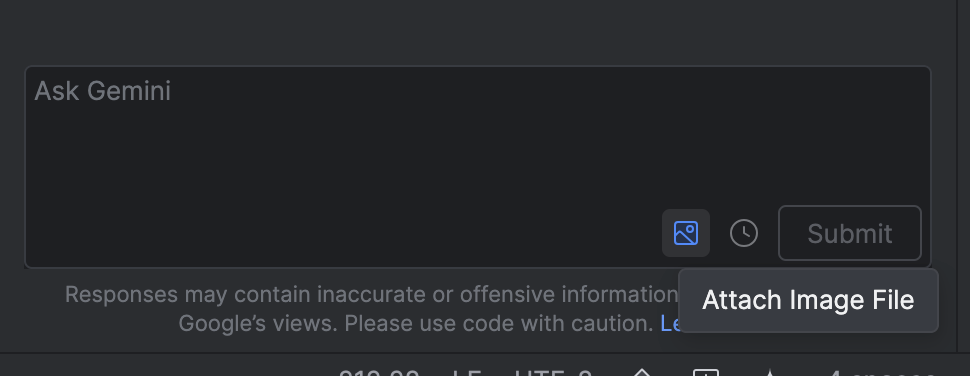

Google has added an "Attach Image File" button to the Gemini chat window in Android Studio. This seemingly simple addition is actually revolutionary—it allows you to upload JPEG or PNG images directly into your conversations with Gemini. The AI can then analyze these visuals and respond intelligently based on what it sees.

This feature is available right now in Android Studio Narwhal, and Google plans to bring it to a stable release later.

Even if you've never written a line of code, this matters because it will significantly impact the apps you use daily. Here's why:

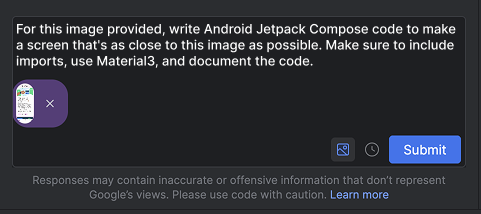

Remember when you had to be both an artist and a programmer to build beautiful apps? Those days are fading fast. Now, developers can sketch a basic wireframe on paper, take a photo, and ask Gemini to transform it into functioning code.

Even more impressively, designers can create high-fidelity mockups in their favorite design tools, and developers can have Gemini convert those directly into working Jetpack Compose code. It's like having a UI developer working alongside you, ready to implement designs as soon as they're ready.

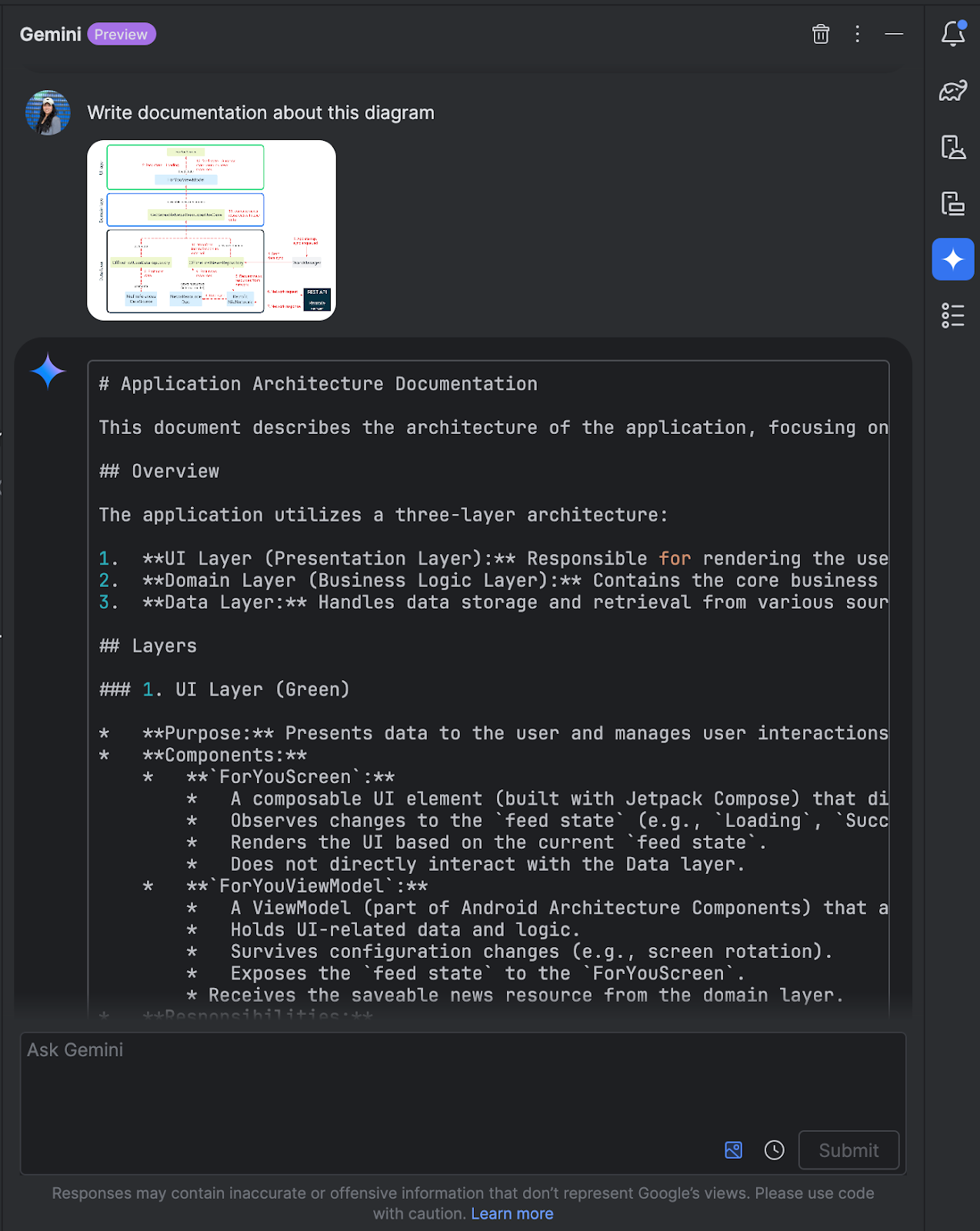

Ever stared at a complex architecture diagram and felt your brain melt a little? Gemini can now help with that too. Developers can upload images of complex system diagrams and ask Gemini to explain what's happening or even generate documentation. This means better team communication and fewer headaches when onboarding new team members.

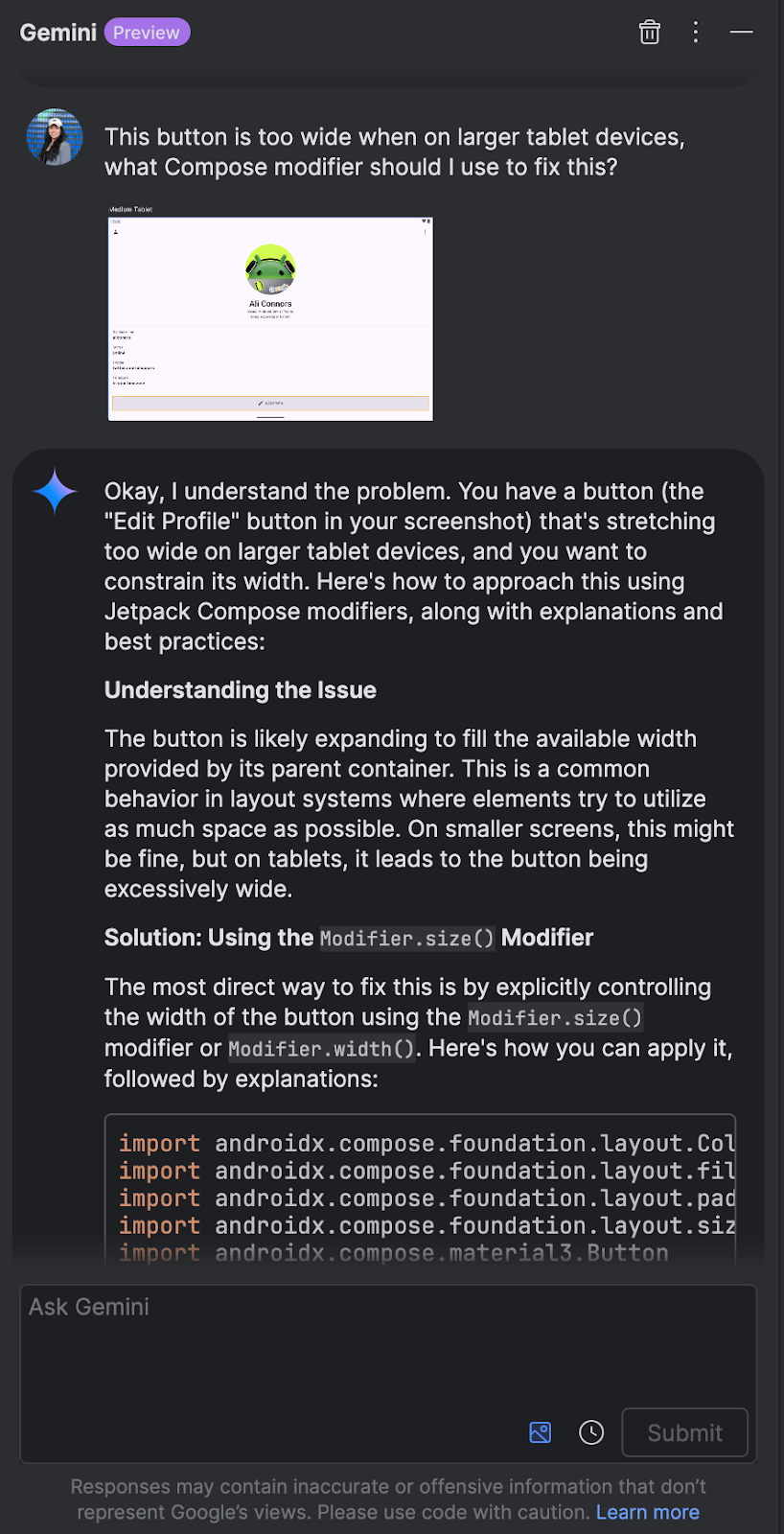

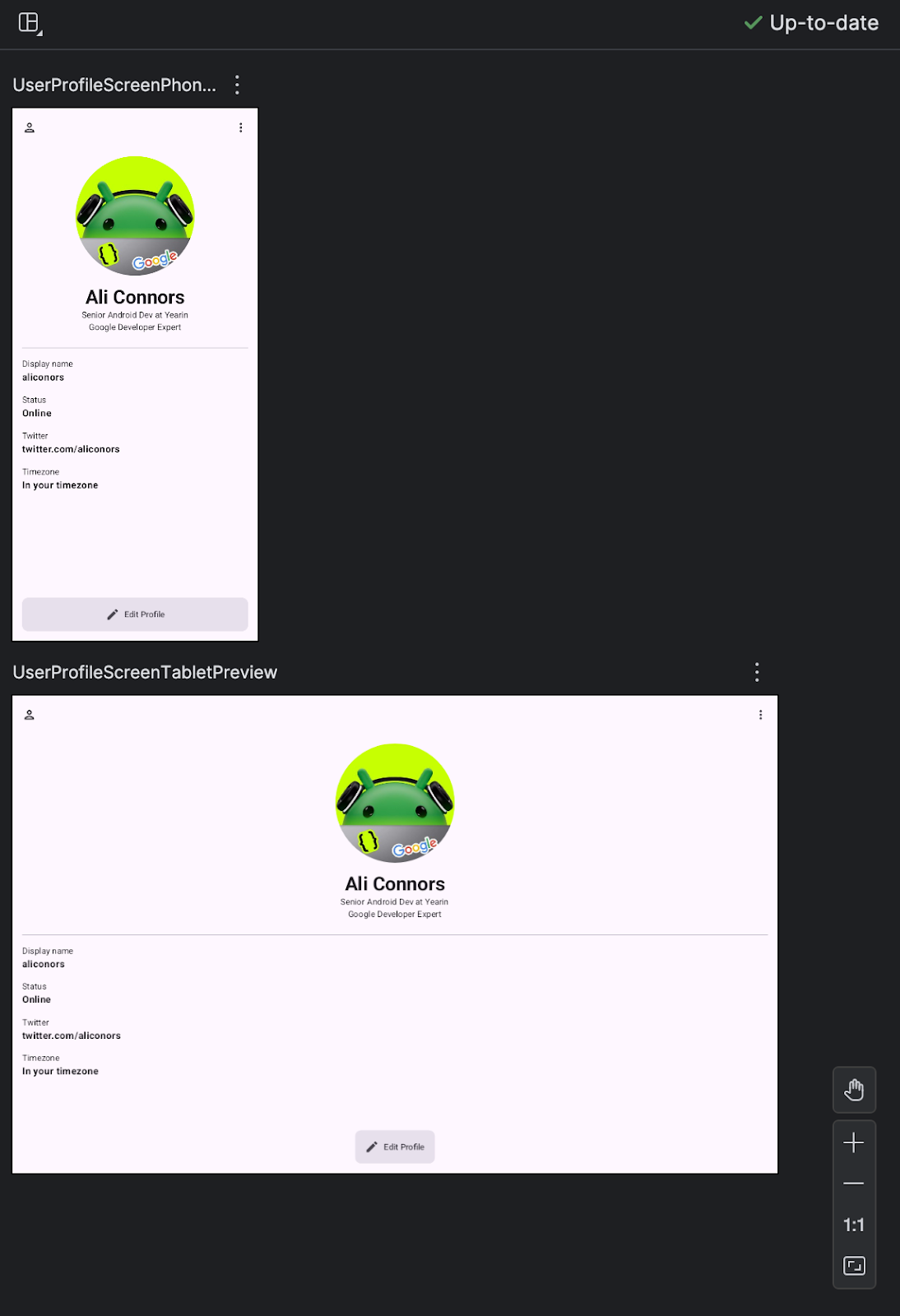

Perhaps most exciting for everyday users—Gemini can now help squash those annoying visual bugs that plague apps. Developers can take screenshots of UI issues (like buttons that stretch awkwardly on tablet screens) and ask Gemini for solutions. The AI can analyze the image and suggest fixes, potentially leading to better-looking apps across different devices.

Of course, there are some limitations. Google notes that Gemini works best with images that have strong color contrasts, and the generated code should be considered a "first draft" that will need developer tweaking. Think of it as an extremely efficient starting point rather than a complete solution.

And for the privacy-conscious among us, Google says that Android Studio won't send any of your source code to its servers without your explicit consent.

This update represents a significant step toward more visual, intuitive programming tools. Rather than translating creative ideas into abstract code, developers can increasingly "show" their tools what they want to build.

For non-developers, the impact might not be immediately visible, but over time, you'll likely notice Android apps becoming more polished, more consistent across devices, and quicker to reach the market. New developers might also find it easier to create their first apps, potentially bringing fresh ideas and experiences to your device.

In a world where we increasingly communicate through visuals—photos, videos, memes—it only makes sense that our development tools should understand images too. With Gemini's new vision capabilities, Android Studio is taking a major leap in that direction.

Want to try it yourself? Download Android Studio Narwhal today and start uploading those wireframes!