Google's Angana Ghosh explains how Android's Live Caption feature is evolving to recognize things like tone, sighs, and laughter.

Captions are a crucial accessibility feature that millions of people who are deaf or hard of hearing rely on. Even people without hearing loss use captions to follow what's happening in videos, whether because they're in a noisy environment or can't make out what's being said. Many videos, especially those shared on social media, lack captions, though, making them inaccessible. Android's Live Caption feature solves that problem by generating captions for audio playing on the device, but it has a limitation: It doesn't capture things like tone, vocal bursts, or background noises. That's changing today with Expressive Captions on Android, a new feature of Live Caption that Google says will "not only tell you what someone says, but how they say it."

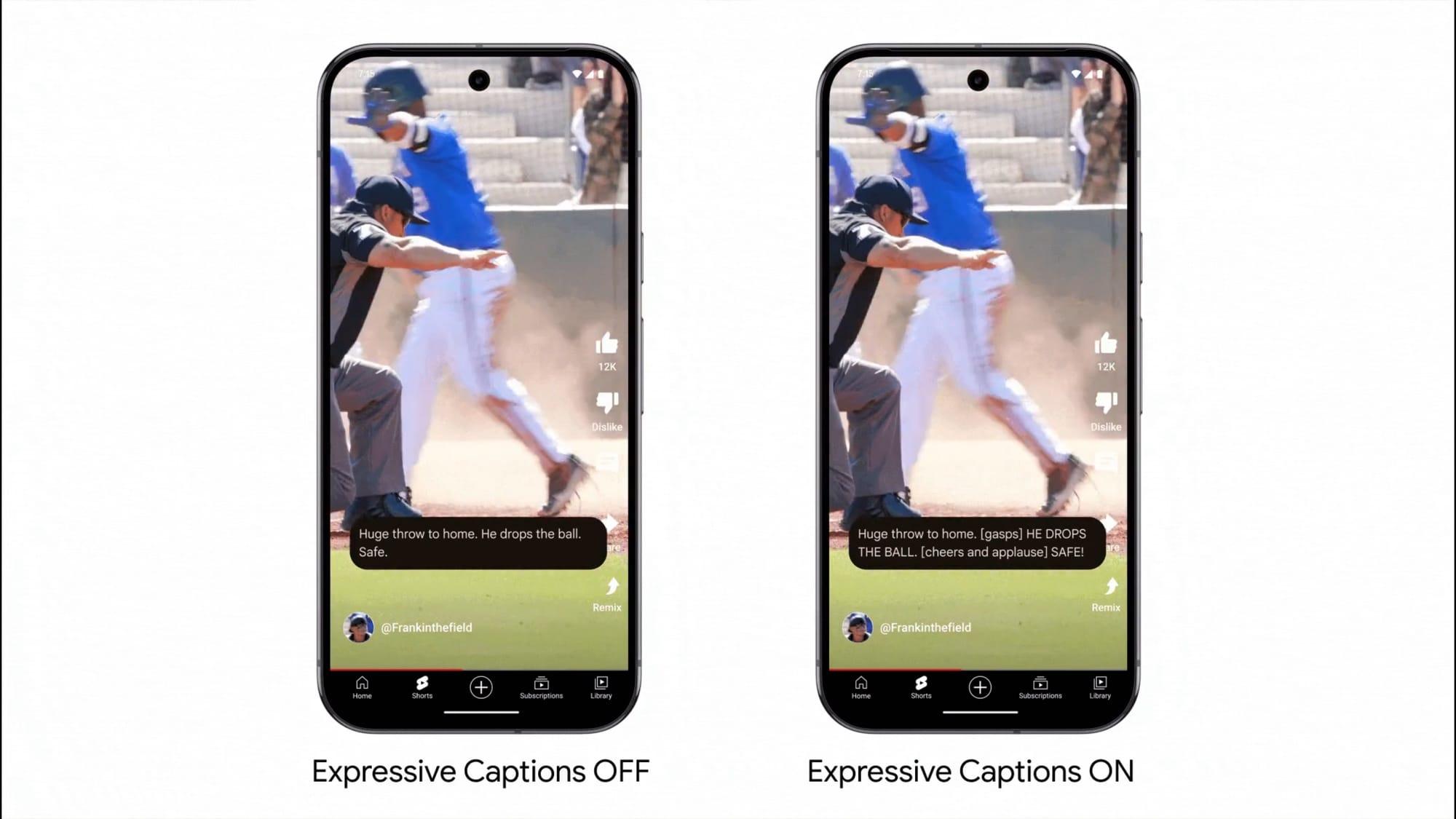

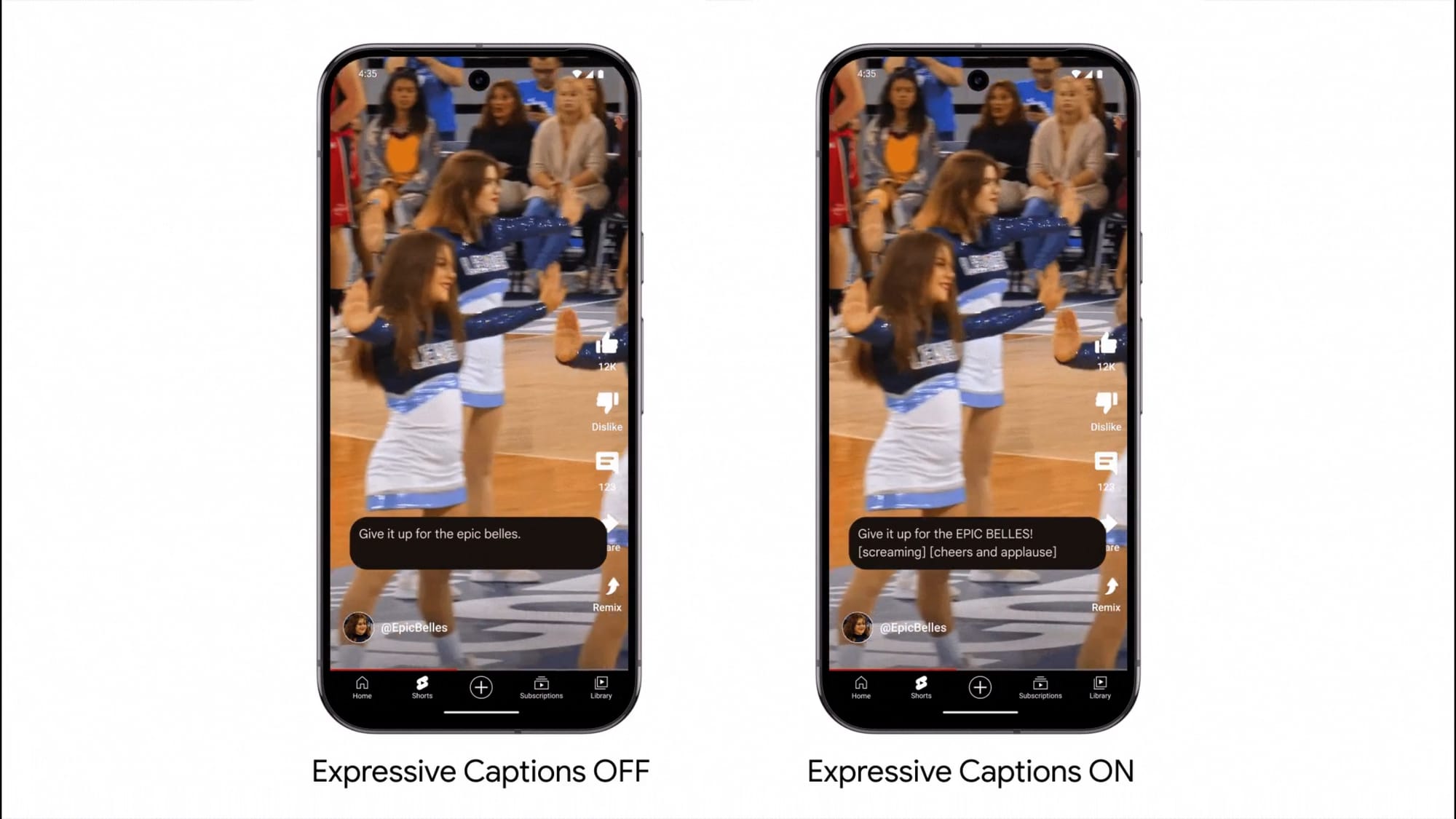

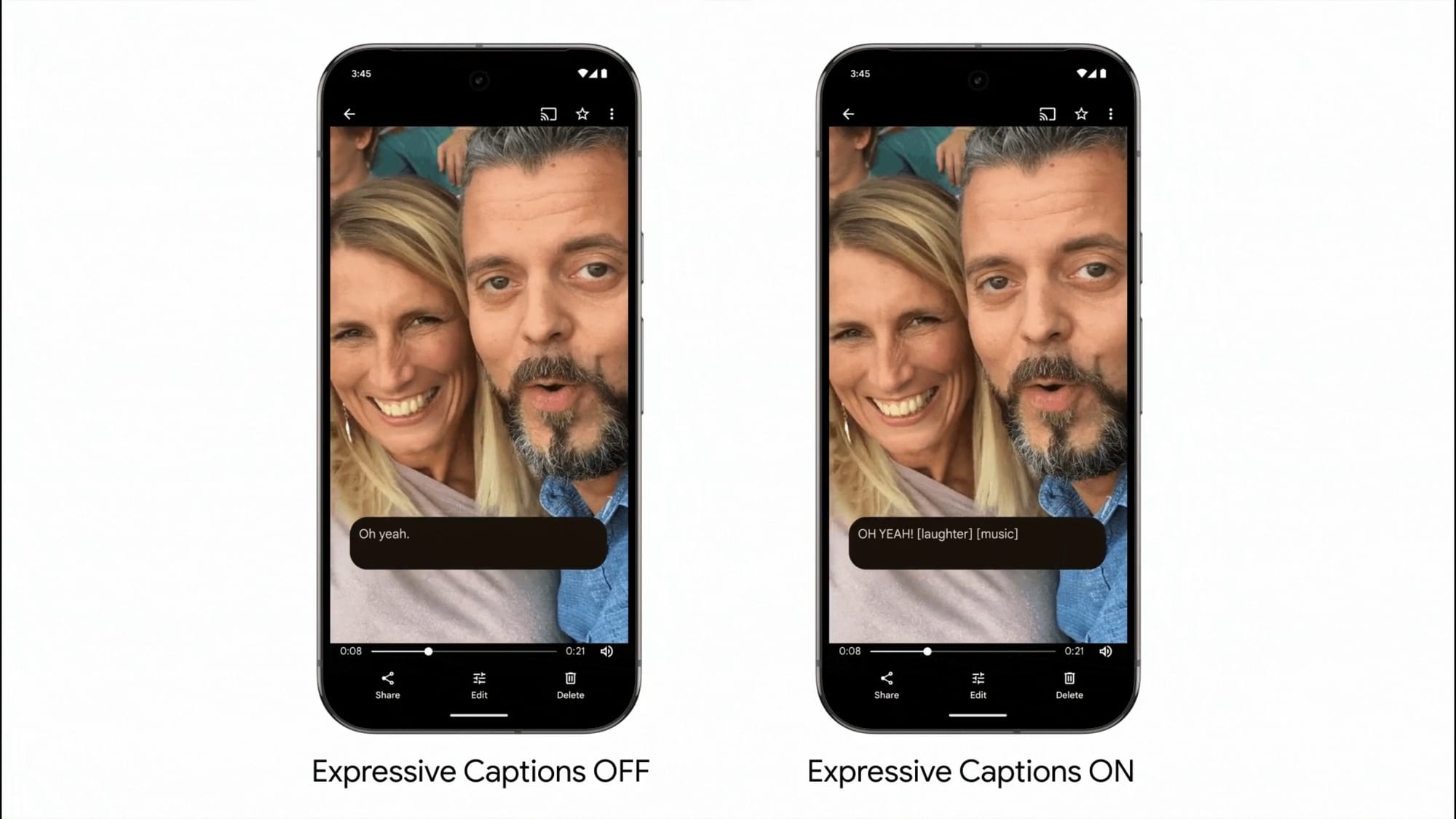

Expressive Captions uses an on-device AI model to recognize things like tone, volume, environmental cues, and human noises. For example, captions can now reflect the intensity of speech with capitalization, so you'll know when someone is shouting "HAPPY BIRTHDAY!" instead of whispering it. They can also show when someone is sighing or gasping, helping you make out the tone of their speech. Finally, they can tell you when people are applauding or cheering, so you know what's happening in the background.

Here are some examples of what captions can look like when the Expressive Captions feature is turned on versus when it's off.

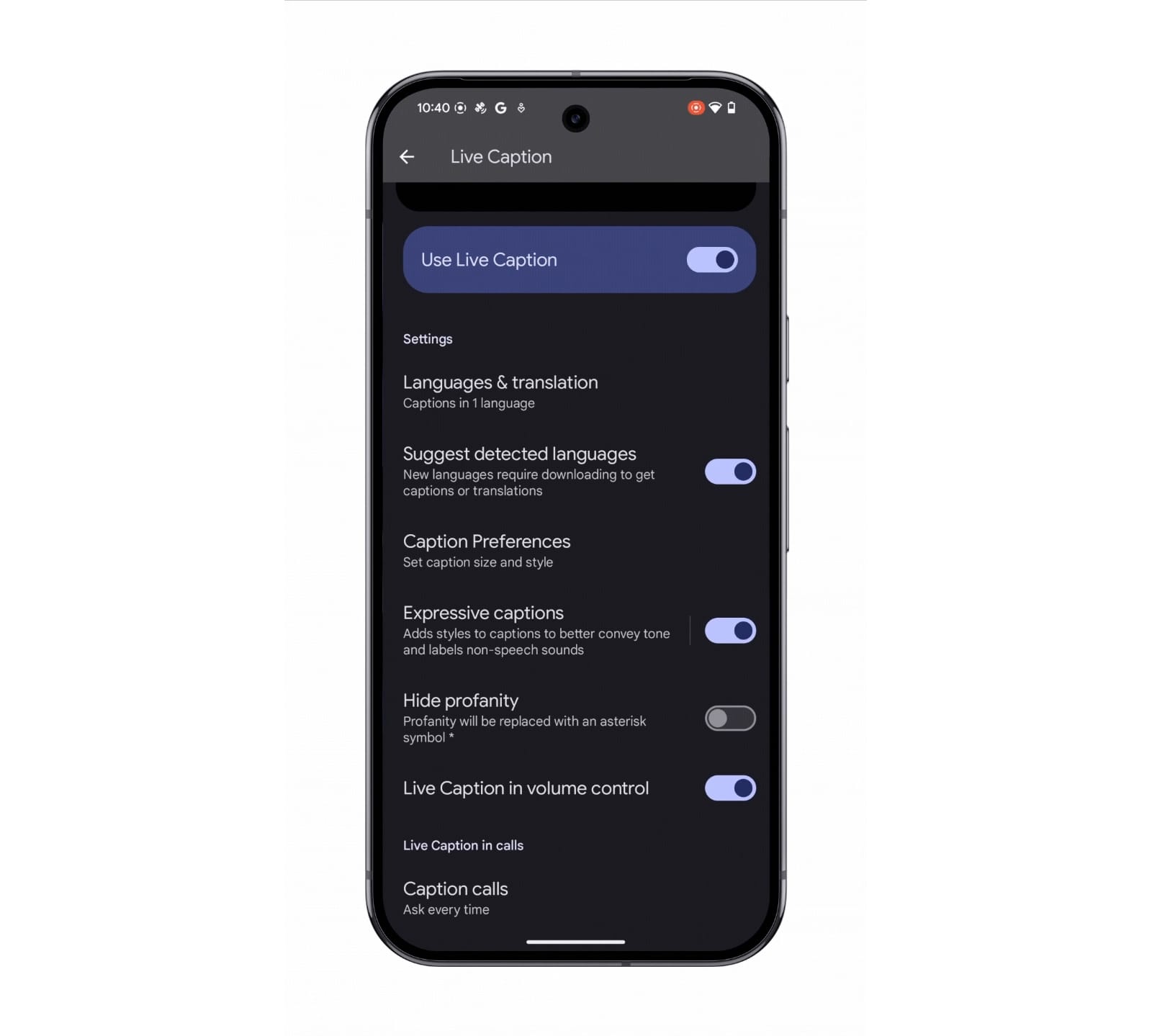

Because the Expressive Captions feature is part of Live Caption, it'll be available on many Android phones that support the Live Caption feature, not just Pixel phones getting the latest Pixel Drop update. However, it currently only supports devices in the U.S. running Android 14 or later and only works with English language media. To enable the feature, simply toggle "Expressive captions" in Live Caption settings, as shown below.

Once enabled, Expressive Captions will work with most content you watch or listen to, excluding phone calls and Netflix content. The captions even work in real time when you're offline.

Google says its Android and DeepMind teams worked together to "understand how we engage with content on our devices without sound." Expressive Captions uses "multiple AI models" to capture spoken words, translate them into stylized captions, and label a wider range of background sounds.

Angana Ghosh, the Director of Product Management on Android Input and Accessibility, sat down with Jason Howell and me on the Android Faithful podcast to dive a bit deeper into how the company created the Expressive Captions feature.

You can listen to our full 18-minute interview with Ghosh above, where she answered the following questions: