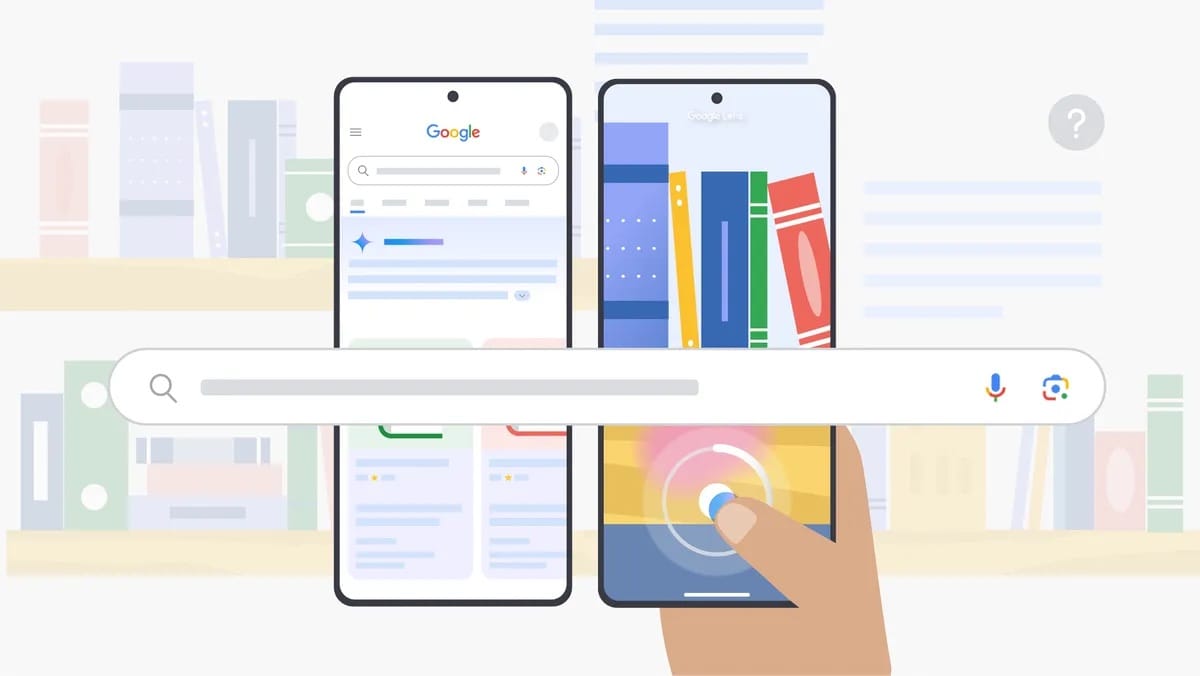

Google Search gets another AI boost with multimodal and Lens support for AI Mode.

Get ready to flex those Google Search app muscles, because Google just announced something seriously cool: AI Mode is leveling up with multimodal search capabilities that'll make your inner geek squeal with delight!

If you haven't been playing with AI Mode yet (available to Google One AI Premium subscribers), you're missing out on what might be the future of search. Think of it as Google Search with a PhD in understanding what you actually want. People have been loving its clean, distraction-free interface and lightning-fast responses that actually get what you're asking.

Here's what makes it special: users are typing queries twice as long as regular Google searches. Instead of "best phones 2025," they're asking "What's the best Android phone under $700 with at least 12GB RAM and the best camera for low-light photography?" See the difference? We're getting conversational with our searches!

Now here's where Android users should get excited: Google is bringing Lens capabilities directly into AI Mode. This isn't just two features slapped together. It combines Gemini's understanding of your whole image with Lens's ability to pinpoint the individual objects in it.

Imagine you're browsing a tech store and spot an unfamiliar gadget. Just snap a photo, ask "What can this do and is it compatible with my Pixel?" and watch the magic happen!

Let's break down what's happening behind the scenes (because as Android enthusiasts, we know you love the technical details):

Gemini (Google's advanced AI model) analyzes your entire image—not just identifying objects but understanding their relationships, characteristics, and context. It's like having a friend with photographic memory and encyclopedic knowledge examining the image.

Google Lens technology kicks in to pinpoint specific items in the frame with remarkable accuracy—whether it's identifying the exact model of a smartphone or distinguishing between similar-looking components.

Here's where it gets really interesting! Using what Google calls a "query fan-out technique," the system generates multiple search queries about both the entire image and the individual elements within it. Imagine running, all at once, the whole set of specialized searches a search expert might think to try.

All this information gets compiled, filtered, and presented in a coherent, conversational response that directly addresses your question—complete with helpful links for diving deeper.
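For readers who like to see ideas in code, here is a minimal Python sketch of the general fan-out pattern. It is not Google's implementation, which isn't public. The names `DetectedObject`, `fan_out_queries`, `fake_search`, and `run_fan_out` are hypothetical, and `fake_search` is a stub standing in for a real search backend. The sketch only shows the shape of the idea: one query about the whole scene plus one per detected object, issued concurrently, with the results merged and de-duplicated.

```python
import asyncio
from dataclasses import dataclass


@dataclass
class DetectedObject:
    # Hypothetical stand-in for an object an image recognizer (like Lens) might report
    label: str


def fan_out_queries(question: str, scene: str, objects: list[DetectedObject]) -> list[str]:
    """Build one query about the whole image plus one query per detected object."""
    queries = [f"{question} ({scene})"]
    for obj in objects:
        queries.append(f"{obj.label}: {question}")
    # Drop duplicate queries while preserving their original order
    return list(dict.fromkeys(queries))


async def fake_search(query: str) -> list[str]:
    # Hypothetical stub: a real system would call an actual search backend here
    await asyncio.sleep(0)
    return [f"result for '{query}'"]


async def run_fan_out(question: str, scene: str, objects: list[DetectedObject]) -> list[str]:
    """Issue all fan-out queries concurrently, then merge and de-duplicate the results."""
    queries = fan_out_queries(question, scene, objects)
    batches = await asyncio.gather(*(fake_search(q) for q in queries))
    merged: list[str] = []
    seen: set[str] = set()
    for batch in batches:
        for result in batch:
            if result not in seen:
                seen.add(result)
                merged.append(result)
    return merged


if __name__ == "__main__":
    phones = [DetectedObject("Pixel 8"), DetectedObject("Galaxy S24")]
    for line in asyncio.run(run_fan_out("Which has the best battery life?", "phones on a store display", phones)):
        print(line)
```

In a production system, the per-object queries would presumably come from a model rather than a string template, and the final step would rank and summarize results rather than just de-duplicate them.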

Picture this scenario that'll resonate with any Android enthusiast:

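You're debating between several Android phones at a store. Snap a photo of the display, ask "Which of these has the best battery life and camera system for my needs?" and AI Mode will identify each phone, search for details on each one, and pull the answers together into a single conversational response with links for digging deeper.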

Or imagine scanning your cluttered tech drawer and asking, "Which of these cables will charge my Pixel the fastest?" The system will try to identify each cable and look up details like USB-C Power Delivery (PD) support and wattage ratings, which are hard to tell apart by eye!

The awesome news is that Google is expanding AI Mode access to millions more Labs users in the U.S. If you're one of them, you can jump into this futuristic search experience right now by downloading or updating the Google app on your Android device.

Open the app, look for the AI Mode option, and start experimenting with image+text queries. The more we all use it and provide feedback, the smarter it'll get—that's how we Android enthusiasts help shape the future of tech!

What image will you search with first? A mysterious circuit board? Your collection of Android Bugdroid figures? That strange adapter you found at the bottom of your tech box? The possibilities are endless, and we can't wait to hear how you're using this powerful new tool!