Google's AI assistant levels up with new tools that might just make you forget about your other apps and make coding MUCH easier.

Google has just dropped some exciting new Gemini features that could change how you work with AI on a daily basis. The company today announced two major additions to its AI assistant: Canvas and Audio Overview. Let's dive into what these new tools can do and why Android users might want to take notice.

Remember how you used to bounce between apps to draft documents, edit them, and then share them? Google's new Canvas feature aims to simplify that workflow by creating an interactive space right within Gemini where you can create and refine content in real time.

Canvas isn't just another text editor—it's designed to be a true collaboration space between you and your AI assistant. You simply select "Canvas" in the prompt bar, and you're ready to start writing and editing with Gemini's help. The changes appear instantly, creating a smooth back-and-forth creative process.

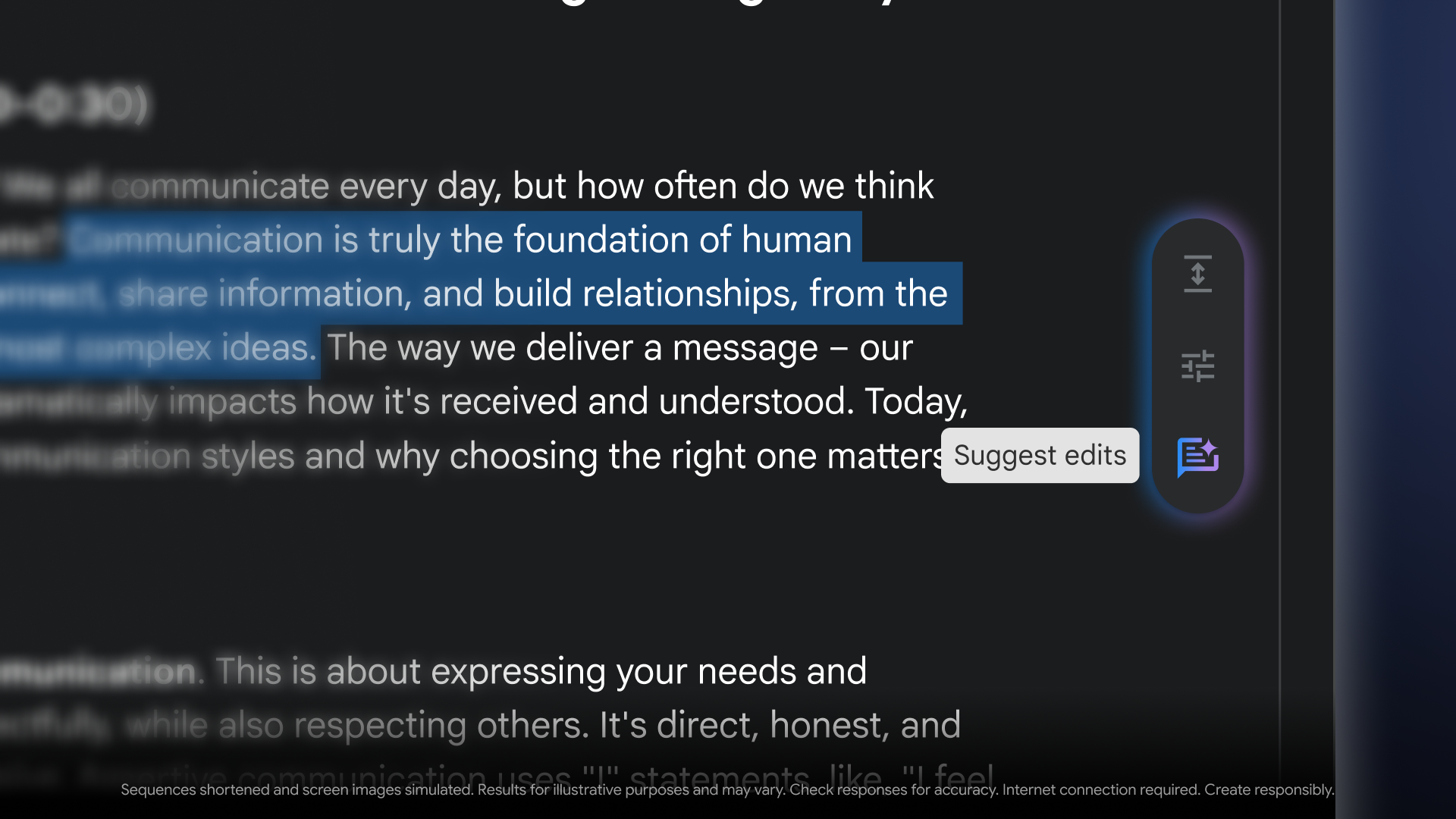

What makes Canvas particularly interesting is how it handles editing. You can highlight specific sections of text and ask Gemini to adjust the tone, length, or formatting. Need that paragraph to sound more professional? Want to make your conclusion more concise? Just ask, and Gemini will suggest edits on the fly. When you're finished, you can export your creation to Google Docs with a single click if you want to collaborate with human teammates.

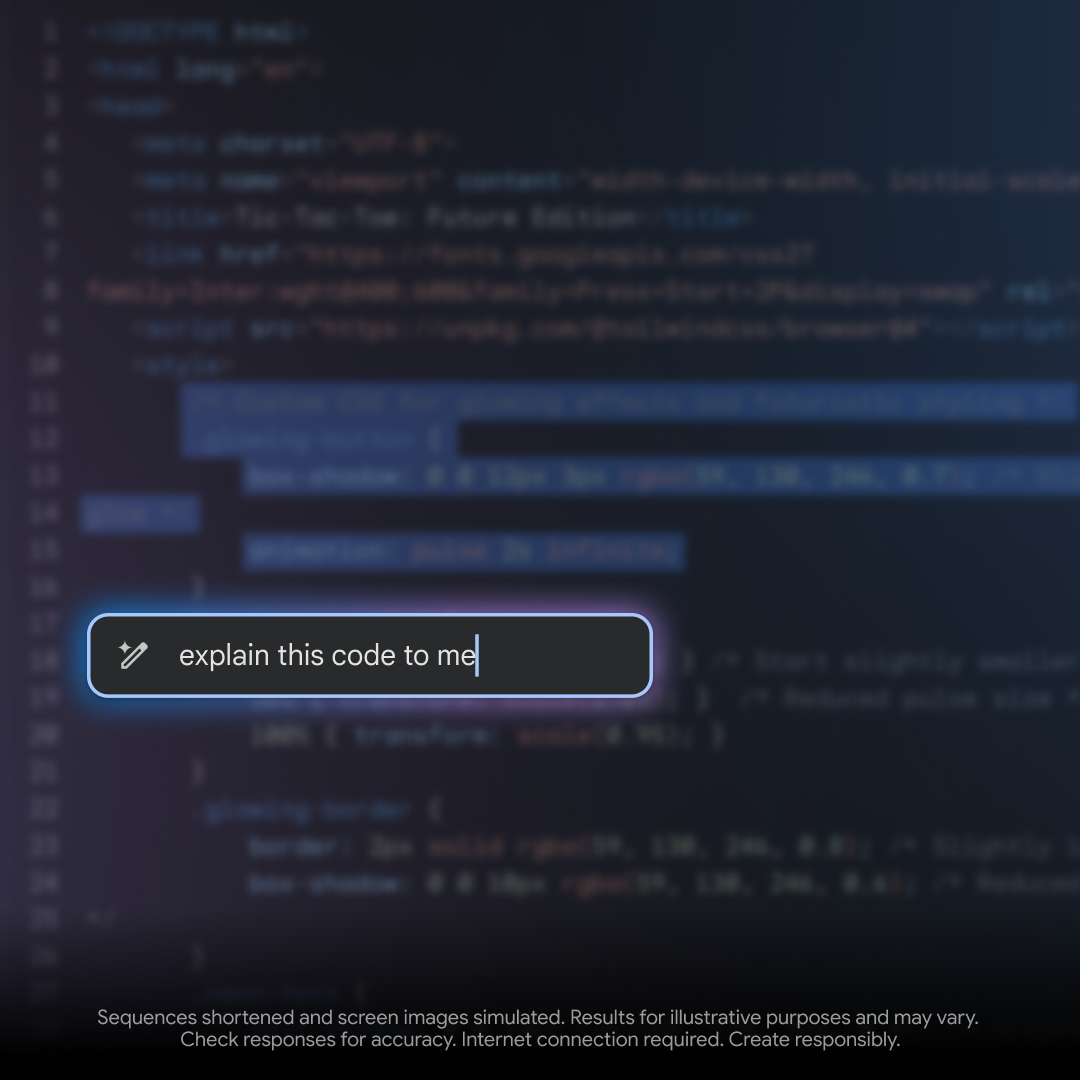

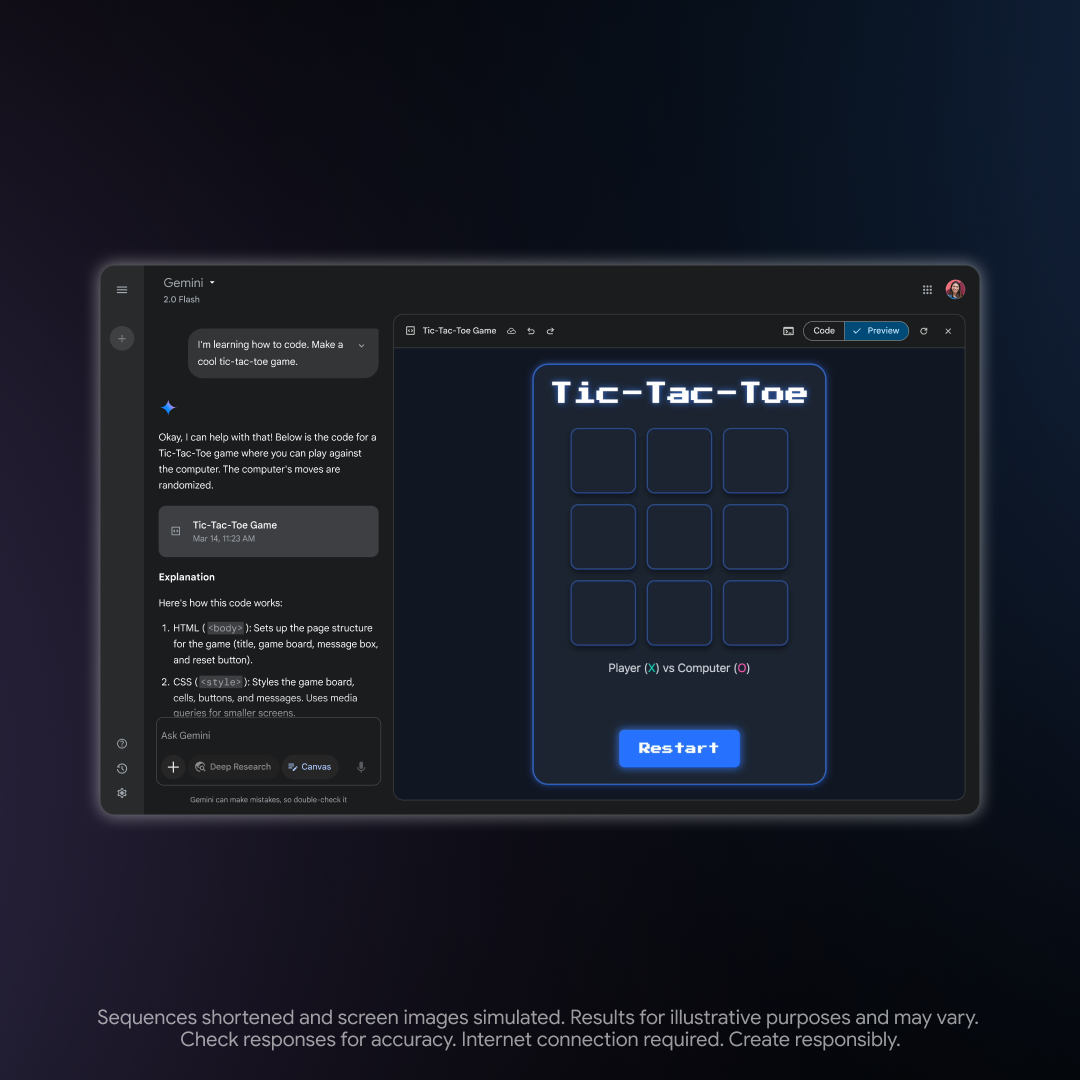

For the developers among us, Canvas offers something even more enticing. It streamlines the process of turning your coding ideas into functional prototypes. Whether you're working on web apps, Python scripts, games, or other interactive applications, Canvas provides a space where you can generate code and immediately see how it works.

One of the standout features for web developers is the ability to preview HTML and React code in real time. Imagine you're building an email subscription form for your website. You can ask Gemini to generate the HTML, then immediately see how it would look and function. Want to tweak the design? Just ask for changes to the input fields or for an added call-to-action button, and the preview updates instantly.
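To give a sense of what that email subscription form might involve, here is a minimal TypeScript sketch that builds the markup as a string. It is not output from Gemini or part of any Canvas API; the names `renderSubscribeForm`, `SubscribeFormOptions`, and `escapeHtml`, along with the `/subscribe` endpoint, are made up for illustration.

```typescript
// Hypothetical sketch: none of these names come from Gemini or Canvas.
interface SubscribeFormOptions {
  action: string;       // endpoint that receives the form POST (assumed)
  buttonLabel: string;  // call-to-action text on the submit button
  placeholder?: string; // hint shown in the empty email field
}

// Escape characters that are special in HTML text and attribute values.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the markup for a minimal email subscription form.
function renderSubscribeForm(options: SubscribeFormOptions): string {
  const placeholder = options.placeholder ?? "you@example.com";
  return [
    `<form action="${escapeHtml(options.action)}" method="post">`,
    `  <label for="email">Email address</label>`,
    `  <input id="email" name="email" type="email" placeholder="${escapeHtml(placeholder)}" required>`,
    `  <button type="submit">${escapeHtml(options.buttonLabel)}</button>`,
    `</form>`,
  ].join("\n");
}

// Example: print the form markup with a "Sign up" button.
const html = renderSubscribeForm({ action: "/subscribe", buttonLabel: "Sign up" });
console.log(html);
```

In this sketch, asking for a different call-to-action would amount to changing `buttonLabel`, which is roughly the kind of small tweak you would describe to Gemini in plain language before checking the preview.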

This feature essentially eliminates the need to switch between your code editor, browser, and other tools during the prototyping phase. It's all right there in Canvas. (Though it's worth noting that the code preview feature isn't available on mobile yet.)

If Canvas is about creation, Audio Overview is about consumption—specifically, consuming complex information in a more engaging way. This feature, which was previously available in Google's NotebookLM, transforms your documents, slides, and research reports into podcast-style audio discussions.

Here's how it works: You upload your files, click the suggestion chip that appears above the prompt bar, and Gemini creates a conversation between two AI hosts who discuss your content. These virtual hosts summarize the material, connect different topics, and offer unique perspectives through a dynamic back-and-forth conversation.

This feature feels particularly useful for absorbing information while multitasking. Imagine uploading your class notes, research papers, or lengthy email threads and getting an audio summary you can listen to during your commute or workout. The discussions are accessible on both web and mobile, and you can easily share or download them for on-the-go listening.

Currently, Audio Overview is available in English only, though Google promises more languages are coming soon.

Both Canvas and Audio Overview are rolling out globally starting today for Gemini and Gemini Advanced subscribers. Canvas is available in all languages that support Gemini Apps, while Audio Overview is currently limited to English.

To try Canvas, simply select it from the prompt bar in your Gemini interface. For Audio Overview, upload documents or slides and look for the suggestion chip that appears.

With these new features, Google is clearly positioning Gemini as more than just a chatbot—it's becoming a full-fledged creative and productivity assistant. Canvas, in particular, seems poised to challenge dedicated document and code editors by integrating AI assistance directly into the creation process.

After getting access to Canvas in Gemini, I did a test run where I asked it to help me code a simple Android app. Within minutes, I had not only the code but also instructions for configuring and running it in Android Studio. It was quite mind-blowing how easy it was.

For Android users who are already invested in the Google ecosystem, these tools offer a compelling reason to give Gemini a closer look, especially if you regularly work with documents or code. The seamless integration with Google Docs is particularly noteworthy for collaborative workflows.

The real question is whether these features will be enough to lure users away from standalone coding environments or document editors they've grown accustomed to. For casual users or those just getting started with coding, the all-in-one approach of Canvas could prove incredibly valuable.

Have you tried these new Gemini features yet? Let us know your thoughts in the comments below!

For more information, visit: https://gemini.google/overview/canvas/